The essay “How to Read an AI Image: The Datafication of a Kiss,” featured on Cybernetic Forests, and the second chapter of *Your Computer Is on Fire*, “Your AI Is Human” by Sarah T. Roberts, together illuminate the intricate interplay between AI, human experience, and the ethical considerations embedded in our engagement with the digital realm.

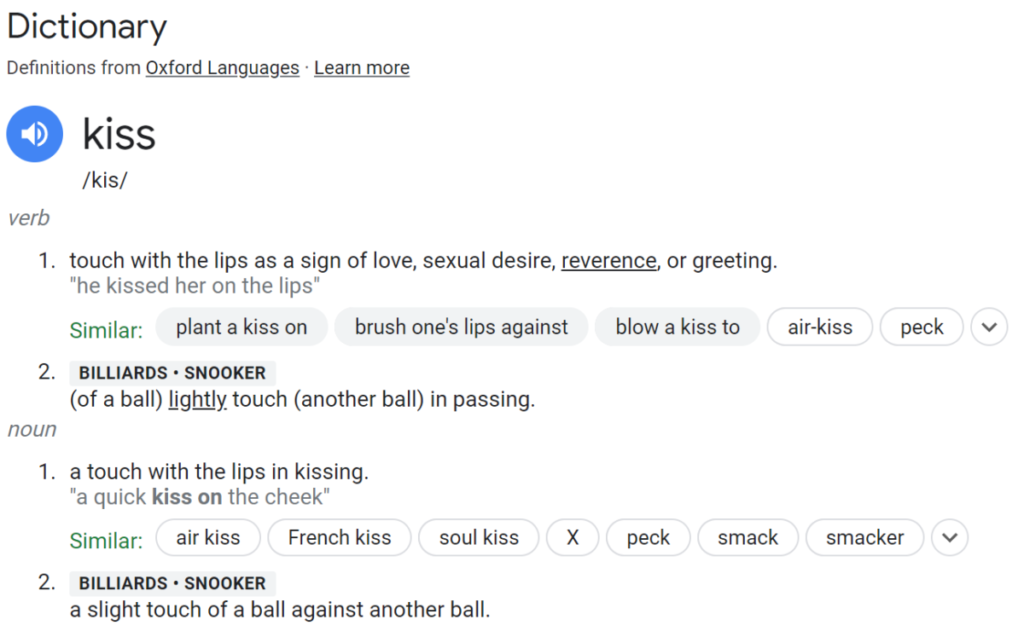

The essay's examination of the “datafication” of human expression explores the subtleties of deciphering AI-generated images, focusing on the emotion-laden spectacle of a kiss. I find it fascinating that such an image can be constructed at all, given the gap between how AI and humans understand a kiss. Judging from the images generated with DALL·E 3, the model seems to have taken an objective approach, building the image from a definition of a kiss rather than from any experience of one.

Despite the vast amount of media available for AI to draw from, it is interesting that the pictures were not as seamless as I expected.

The chapter “Your AI Is Human” effectively confronts the overlooked reality that human influence resides beneath every line of code and algorithm. Roberts draws critical attention to the human labor woven into the creation and ongoing upkeep of AI systems.

As Roberts states in the subsection “Human and AI in Symbiosis and Conflict”:

“First, what we can know is that AI is presently and will always be both made up of and reliant upon human intelligence and intervention. This is the case whether at the outset, at the identification of a problem to be solved computationally, a bit later on, with the creation of a tool based in algorithmic or machine-learning techniques; when that tool is informed by, improved upon, or recalibrated over time based on more or different human inputs and intervention; or whether it is simply used alongside other extant human processes to aid, ease, speed, or influence human decision-making and action-taking, in symbiosis or perhaps, when at odds with a human decision, in conflict” (64).

This passage underscores the ethical obligations that accompany human involvement in AI, and the responsibility and accountability that seem to be missing from AI's back rooms.

Read together, these two pieces highlight the responsibility that we, as humans, have when engaging with AI-generated content. I think part of the raw fascination with AI is that its guardrails are not yet fully formed, which means human evils can go unmoderated on the internet without anyone being held accountable. Because of that, I feel that humans have found themselves in a perpetual cycle of creating data and erasing the boundaries between the digital and the human.

Questions:

- Is it inherently “bad” that AI models are based on humans?

- What influence would women have if they were made an integral part of programming AI?

- Would introducing stricter guardrails incite backlash among United States citizens who see them as a limit on their right to freedom of speech?
