The Apollo 15 Learning Hub project was conceived in the Fall of 2016 and launched in late July 2021 to commemorate the 50th anniversary of the Apollo 15 mission. The Emory Center for Digital Scholarship (ECDS) and the project’s partners developed the learning hub to assemble, preserve, and make available primary source records of Apollo 15 for research and education, and as an example of a uniquely human endeavor. The Apollo 15 mission relied on a Lunar Module (LM), known as the “Falcon,” which detached from the Command and Service Module (CSM) and descended to the lunar surface. In a previous blog post, ECDS Visual Information Specialist Arya Basu gave a brief explanation of his work on the Hub. In this follow-up post, Dr. Basu provides more technical insight into the 3D lunar module simulation featured on the website (linked below).

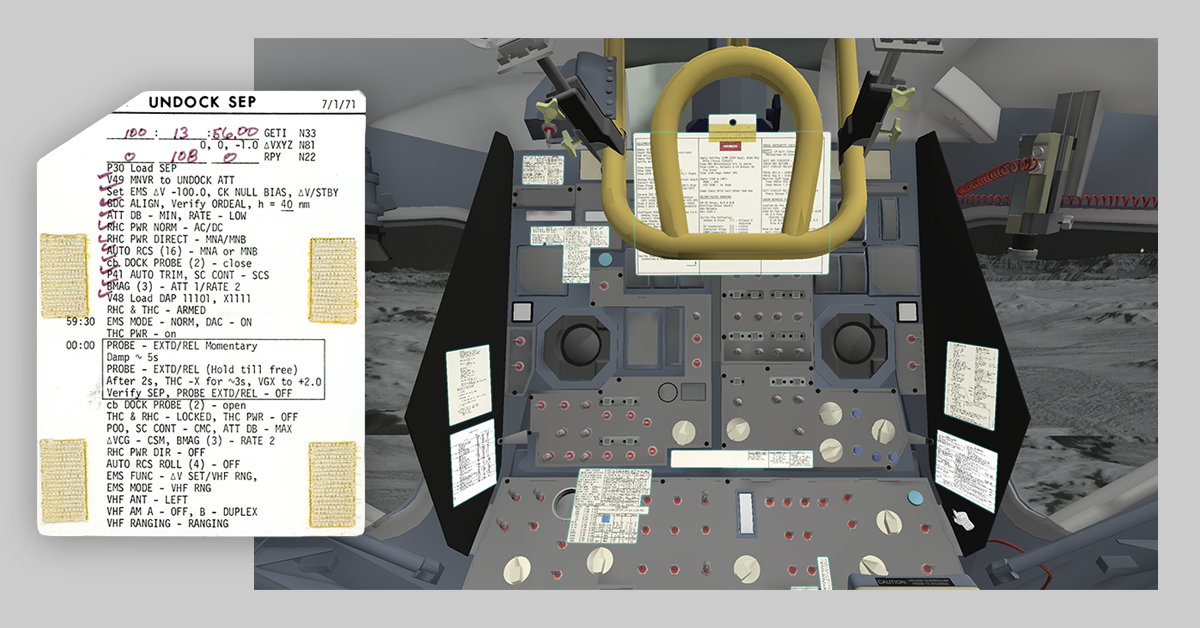

In recreating the Apollo 15 LM, Falcon, we had to capture the moment right after the Apollo 15 crew landed on the surface of the Moon. To make the simulation as immersive and accurate as possible, we modeled the LM interior in 3D based on detailed research into the LM’s anatomy, and placed LM Cue Cards on the main control panel. Users can interact with the 3D model as events unfolded in 1971, with original video footage of the LM’s lunar descent preceding the simulation.

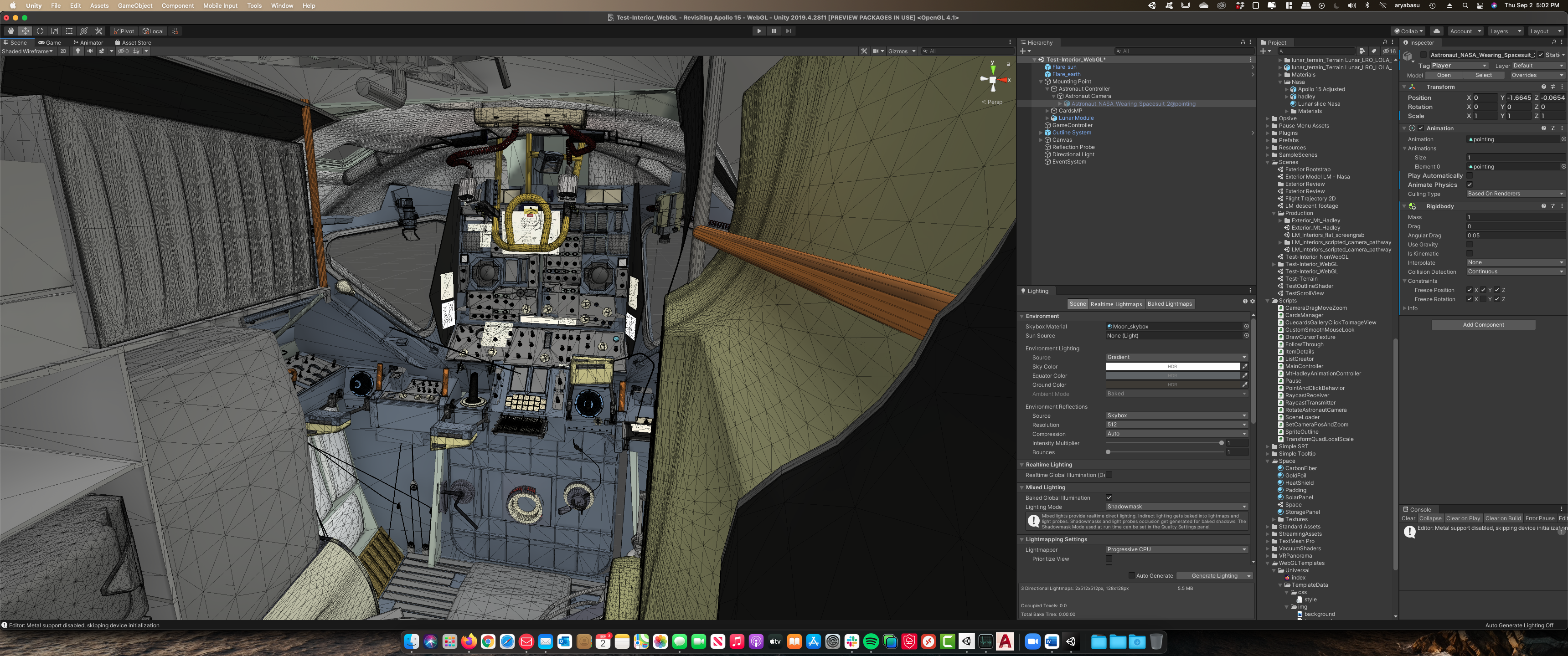

One of the technical challenges of rendering a complex 3D environment like the Apollo 15 LM interior comes down to graphical horsepower. In the early days of computer graphics (~1987), researchers[1] argued that rendering the real world would take about 80 million polygons per picture. By that estimate, a photorealistic virtual environment updated at ten frames per second would need to process 800 million polygons per second. In our current 3D model of the Falcon, the first-person camera renders approximately 850K triangles and roughly 800K vertices at any given moment during the interactive experience. Streaming this much information through the web, with the limited graphical resources available to a typical modern web browser, could stretch any computer thin.
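The arithmetic behind these figures can be sketched in a few lines of Python. The polygon and triangle counts come from the text; treating triangles as equivalent to polygons, and comparing the Falcon model at the same ten-frames-per-second rate, are simplifying assumptions made only for illustration.

```python
# Figures quoted in the text.
POLYGONS_PER_PICTURE = 80_000_000  # per-picture estimate attributed to [1]
FRAMES_PER_SECOND = 10             # update rate used in the text
FALCON_TRIANGLES = 850_000         # approximate triangles rendered by the camera


def polygons_per_second(polygons_per_frame: int, fps: int) -> int:
    """Polygon throughput needed to redraw every frame at the given rate."""
    return polygons_per_frame * fps


photoreal_rate = polygons_per_second(POLYGONS_PER_PICTURE, FRAMES_PER_SECOND)

# Assumption: the Falcon model is compared at the same 10 fps rate,
# and one triangle is counted as one polygon.
falcon_rate = polygons_per_second(FALCON_TRIANGLES, FRAMES_PER_SECOND)
falcon_share = FALCON_TRIANGLES / POLYGONS_PER_PICTURE

print(f"Photorealistic target: {photoreal_rate:,} polygons/s")
print(f"Falcon model at {FRAMES_PER_SECOND} fps: {falcon_rate:,} triangles/s")
print(f"Falcon share of the per-picture estimate: {falcon_share:.2%}")
```

Under these assumptions, the Falcon scene is a small fraction of the 1987 per-picture estimate, yet it is still a heavy load for a browser to stream and draw in real time.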

To preserve photorealism while balancing the real-time needs of our Apollo 15 LM interior, we implemented a few computer graphics optimization techniques in layers. First, we baked[2] all scene lighting information, including shadow information and global illumination, using Shadowmask lighting mode. This technique eliminated the need to recompute all lighting information on every frame update. Second, we used a level-of-detail (LOD) optimization technique to render 3D geometry at varying levels of detail. This approach eliminated the need to render all scene geometry at its highest resolution at all times, even when it lies outside the interactive camera’s view.
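As a rough illustration of the LOD idea, the Python sketch below picks a mesh variant based on how far an object is from the camera. It is not the Hub's actual implementation: the `LodLevel` class, the `select_lod` helper, the triangle counts, and the distance thresholds are all hypothetical, and real engines often switch levels based on projected screen size rather than raw distance.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class LodLevel:
    name: str
    triangle_count: int
    max_distance: float  # use this level while the camera is within this distance


# Hypothetical levels for one cockpit panel, ordered from most to least detailed.
PANEL_LODS = (
    LodLevel("LOD0", 120_000, 2.0),
    LodLevel("LOD1", 30_000, 5.0),
    LodLevel("LOD2", 5_000, math.inf),
)


def select_lod(levels, camera_pos, object_pos):
    """Return the first (most detailed) level whose distance threshold covers the object."""
    distance = math.dist(camera_pos, object_pos)
    for level in levels:
        if distance <= level.max_distance:
            return level
    return levels[-1]  # fall back to the coarsest level


camera = (0.0, 0.0, 0.0)
for panel_position in [(0.0, 0.0, 1.0), (0.0, 0.0, 3.0), (0.0, 0.0, 50.0)]:
    level = select_lod(PANEL_LODS, camera, panel_position)
    print(panel_position, level.name, f"{level.triangle_count:,} triangles")
```

The design choice is a trade-off: nearby objects keep their full geometry, while distant ones drop to far fewer triangles where the loss of detail is hard to notice.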

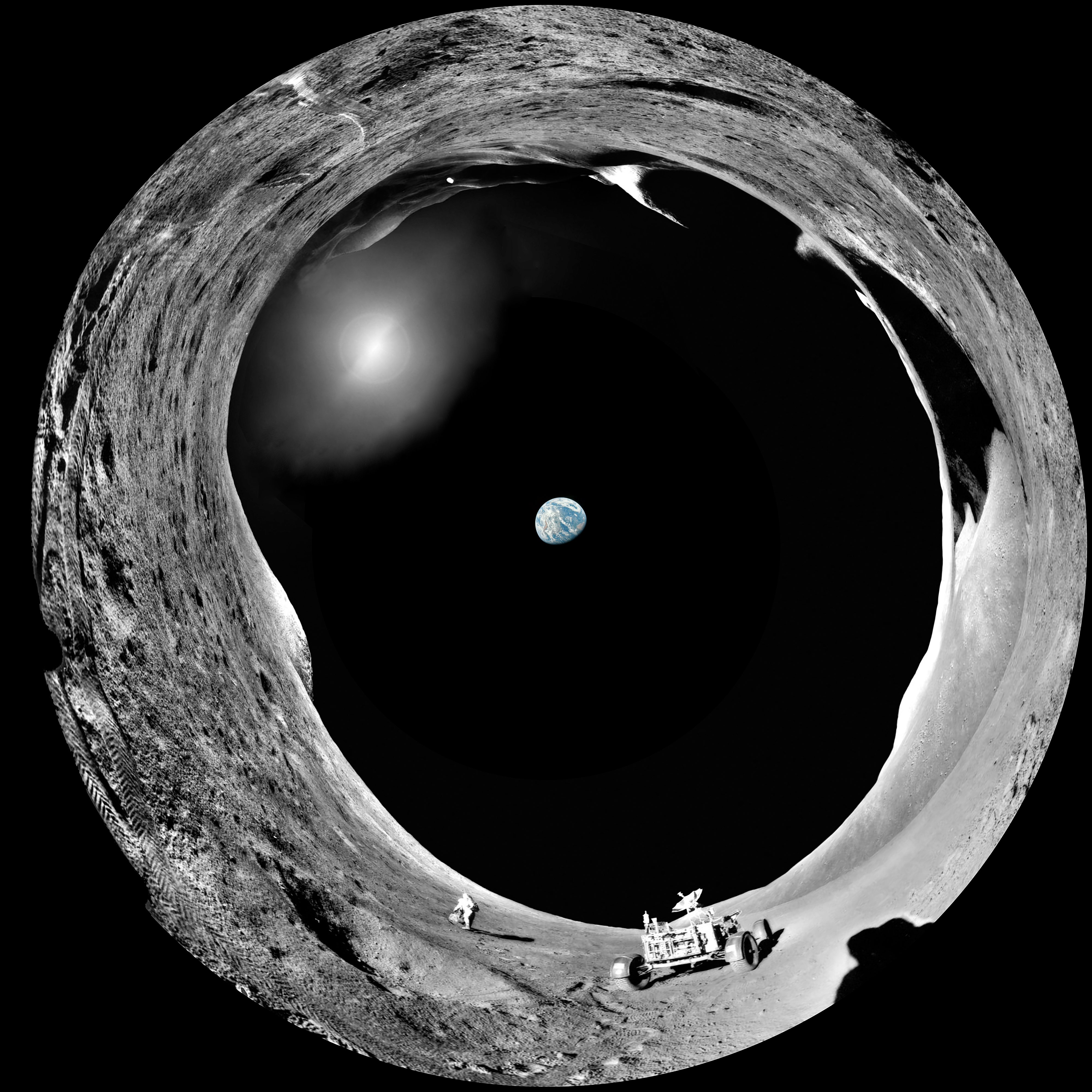

In addition to the recent Apollo 15 Learning Hub website launch, our team has collaborated with the Emory Libraries Exhibitions Team to curate and display an Apollo 15 Learning Hub physical exhibit on the 3rd floor of the Woodruff Library on campus. Our exhibition includes several posters and displays, as well as a hemispherical dome where media is projected overhead. This setup is a unique experiment in creating an immersive installation in an open space, offering the same immersive experience, at scale, for multiple audiences simultaneously in one location.

The dome-shaped mediascape showcases an animated panorama of the lunar terrain with Col. David Scott (Apollo 15 Commander) examining a lunar boulder (see figure below). The panorama was collected by James B. Irwin (Apollo 15 Lunar Module Pilot) at Station 2, near St. George (a lunar crater in the Hadley-Apennine region). The large hill to the left of the rover is the summit of Mons Hadley Delta, in the northern portion of the Moon’s Montes Apenninus.

For the panorama photo, we stitched the shots together and added the Earth based on Commander David Scott’s personal description of the Earth’s position in the sky. The photograph of the Earth was taken by Command Module Pilot Alfred Worden. You can see the Sun rising on the left, as the photos were taken during a lunar morning.

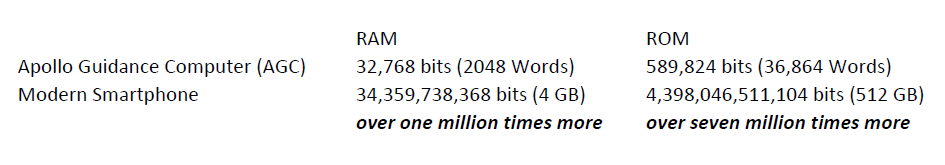

Since that eventful lunar landing in 1971, the general field of computing has come a long way. In contrast to the Apollo Guidance Computer (AGC) of the day, which had limited RAM (random access memory) and ROM (read-only memory) capacities, modern computers allow us to more easily render the entire lunar terrain in 3D. The screenshot below shows a size comparison between the working memory footprint of the AGC versus a modern smartphone.
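To give a sense of scale, the Python sketch below compares the AGC's memory with a smartphone's. The AGC figures (2,048 words of erasable memory and 36,864 words of fixed memory, with 16-bit words including a parity bit) are commonly cited values rather than numbers taken from this post, and the 4 GiB smartphone is a hypothetical example.

```python
AGC_WORD_BITS = 16           # 15 data bits plus 1 parity bit (commonly cited)
AGC_ERASABLE_WORDS = 2_048   # erasable memory, roughly the AGC's RAM
AGC_FIXED_WORDS = 36_864     # fixed (core rope) memory, roughly the AGC's ROM

# Assumption: a hypothetical smartphone with 4 GiB of RAM.
PHONE_RAM_BYTES = 4 * 1024**3


def words_to_bytes(words: int, bits_per_word: int = AGC_WORD_BITS) -> int:
    """Convert a word count to bytes, counting the parity bit as stored data."""
    return words * bits_per_word // 8


agc_ram_bytes = words_to_bytes(AGC_ERASABLE_WORDS)
agc_rom_bytes = words_to_bytes(AGC_FIXED_WORDS)
ram_ratio = PHONE_RAM_BYTES // agc_ram_bytes

print(f"AGC erasable memory: {agc_ram_bytes:,} bytes")
print(f"AGC fixed memory:    {agc_rom_bytes:,} bytes")
print(f"Hypothetical phone RAM is {ram_ratio:,} times the AGC's erasable memory")
```

Under these assumptions, the phone's working memory is about a million times larger than the AGC's.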

The Apollo 15 Learning Hub highlights the Apollo-era technologies and scientific developments that enabled the mission’s success. We hope that the Hub allows users (scholars and enthusiasts alike) to understand more about the unique human endeavor that was the Apollo 15 lunar landing, 50 years later.

[1] Cook, Robert L., Loren Carpenter, and Edwin Catmull. “The Reyes image rendering architecture.” ACM SIGGRAPH Computer Graphics. Vol. 21. No. 4. ACM, 1987.

[2] “Baking” means saving information related to a 3D mesh into a texture file, freezing and recording the result of a computer process to save extra CPU cycles.