Polyglot or Noob?

by Kaitlynn Love

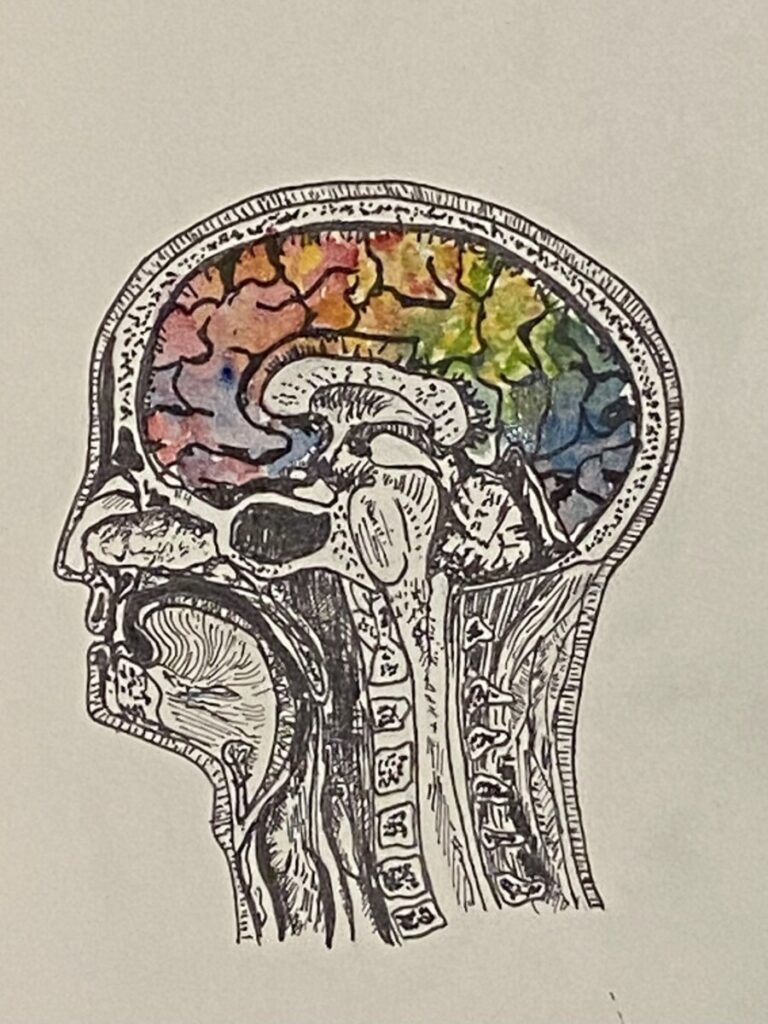

art by Anonymous

Language is one of the primary ways we communicate with each other, and it is a fundamental aspect of human social behavior that is rapidly acquired early in life. Languages can be sound-based, sign-based, or tactile, and the mechanisms of language acquisition differ depending on when in life the acquisition occurs. Second language acquisition may be more difficult because it often relies on alternative mechanisms that come into play beyond the sensitive period, a time span during which a young individual’s brain is especially malleable and shaped by experiences. Understanding how the mechanisms used during this period differ from those used beyond it may help explain some of the specific challenges of second language acquisition.

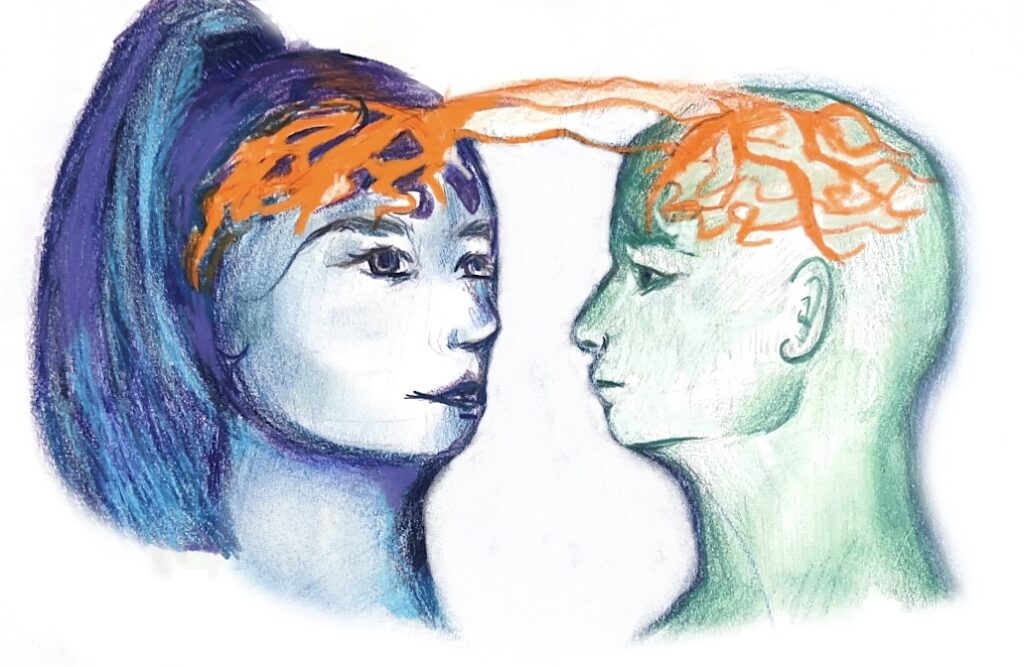

Language learning occurs naturally during the sensitive, or critical, periods when children have access to language exposure. During these specific time periods, the brain is able to rapidly learn a language because of its cognitive processing capabilities and neural plasticity. Neural plasticity is the brain’s ability to modify its functions and connections to accommodate newly acquired information, such as exposure to a new language. The neural plasticity in children’s frontotemporal systems allows a large variety of languages to be acquired, and it has been demonstrated that there are significant similarities in language acquisition regardless of the modality or style. In particular, deaf children who have access to sign language during the sensitive period acquire it using neural mechanisms similar to those of hearing children who use spoken language [1]. Access to language exposure during sensitive periods is essential for long-term language proficiency. Among deaf children, research has shown that individuals with late exposure to sign language have less activation of the left frontotemporal pathways used for language comprehension [2]. The brain attempts to compensate for this by relying on other neural pathways. Typically, strong right-hemisphere activity indicates a lack of the left-side neural connections that form in early youth. However, even though the brain attempts to compensate for the lack of adequate exposure, there are still significant delays in language comprehension and proficiency [2]. Since language is a fundamental aspect of our social interactions, it is important that babies are screened for hearing impairments, as screening allows caregivers to adjust their approach to ensure that the babies have adequate exposure to language.

Language acquisition that occurs in early life involves left hemisphere specialization [1]. Two important areas in the left hemisphere are Wernicke’s area and Broca’s area. Wernicke’s area refers to the left posterior superior temporal gyrus and the supramarginal gyrus, which sit in front of the occipital lobe at the back of the brain, and it is routinely referenced in discussions of language comprehension [3]. Recent research supports the view that comprehension of key aspects of a first language is made possible by significant left temporal and inferior parietal lobe involvement, which demonstrates that speech comprehension is not isolated to Wernicke’s area. The left temporal and parietal lobes are located between the left frontal lobe and the left occipital lobe at the back of the brain, and their involvement indicates a more extensive language comprehension network. Additionally, Wernicke’s area appears to play a role in speech production [3]. Another area of the brain involved with language is Broca’s area, located in the left inferior frontal gyrus at the bottom of the frontal lobe [4]. It is traditionally defined as the key area involved in speech production, and modern research has demonstrated that Broca’s area plays a vital role in sending information across the broader speech production neural networks. This contradicts earlier ideas that speech production is isolated to Broca’s area [4]. Both of these findings demonstrate the extensive neural interactions among the areas of the left hemisphere that are responsible for language, and these interactions allow language acquisition in early life to be successful in the long term.

The acquisition of a second language is increasingly valuable in the modern world. Bilingualism is also beneficial on an individual level because of the associated cross-domain transfer and cognitive control benefits observed throughout the lifespan [5]. When compared to monolingual individuals, bilingual individuals may experience improvements in non-linguistic general and executive functions, such as switching between tasks and selective monitoring [5]. The sensitive period for language learning also has implications for second language acquisition, but recent data suggest that the sensitive period for second language acquisition extends until adolescence [6]. Additionally, while acquiring a first language after the sensitive period has significant consequences for socialization and cognitive development, the consequences of missing the sensitive period for second language acquisition are not as substantial. Limited proficiency in a second language can hinder communication in that language, but the stakes are lower in that limited second language proficiency does not interfere with typical development throughout childhood.

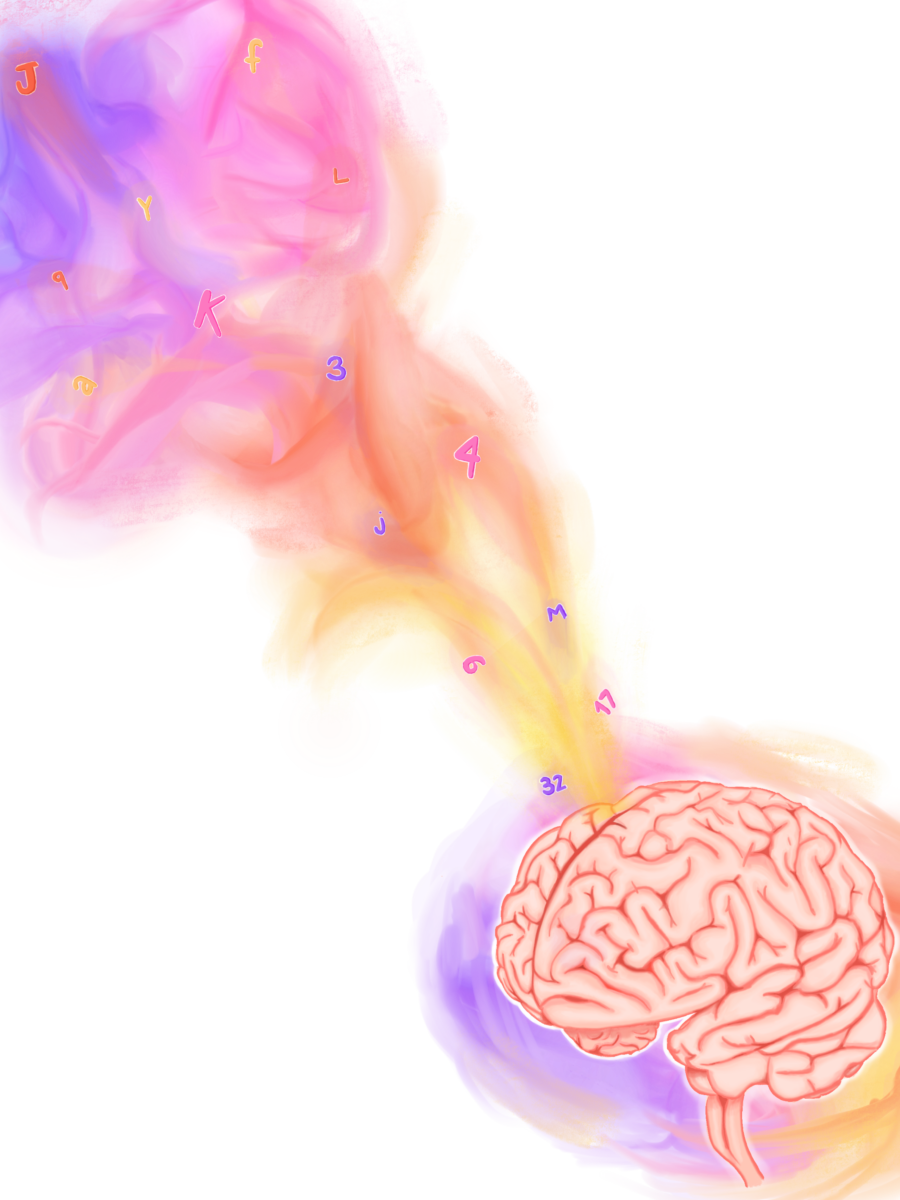

While individuals who acquire a second language beyond the sensitive period have more limited proficiency in the long term, older language learners may make significant gains in the short term. Second language acquisition later in life may come with specific challenges, but the brain retains mechanisms of neuroplasticity that allow that acquisition to occur [5]. The first language can also interfere with second language acquisition: when specific sounds do not occur in the first language, it is especially difficult for individuals to make the necessary distinctions in the second language [5]. Despite those challenges, adults may be able to apply knowledge from the first language to the second language if there is a significant amount of overlap between the languages. In fact, recent research suggests that adults even outperform children in the short term when they are learning a second language with similar materials [6]. However, children have long-term advantages in second language acquisition. Data have shown that there is a rapid decline in second language learning ability at around seventeen to eighteen years old, specifically in the ability to learn and comprehend grammar. It is currently not clear exactly why this occurs, but it may be due to changes in neural maturation and plasticity, increased interference from the first language, or cultural and social factors, including the common transition towards a career in late adolescence [6]. Ultimately, these findings contradict earlier claims that the sharp decline in language learning capabilities occurs before adolescence, and they offer more favorable implications for the acquisition of a second language in late adolescence and early adulthood.

Language acquisition within the sensitive period is a significant factor in typical childhood development; therefore, it is paramount that children receive adequate language exposure for lifelong language proficiency. Beyond this period, the brain acquires language using alternative mechanisms, which include right hemisphere involvement. The use of alternative mechanisms has a wide range of implications for second language learners, but data also suggest that second language acquisition later in life is still achievable. These findings provide valuable insight into human social behavior and communication mechanisms throughout the lifespan.