In the fall of 2025, Candler’s Office of Digital Learning offered a series of faculty workshops on artificial intelligence. It was important for us to be clear that, in a rapidly changing educational and technological environment, no conversation on generative AI will ever be complete. In the four months since we originally offered the “What is AI?” workshop, dozens of new tools, crises, and innovations have emerged in the field.

So, rather than providing answers, we view our role as facilitators—creating spaces (both synchronously and asynchronously) where folks in higher ed can talk frankly about the ways that generative AI continues to shape our shared work.

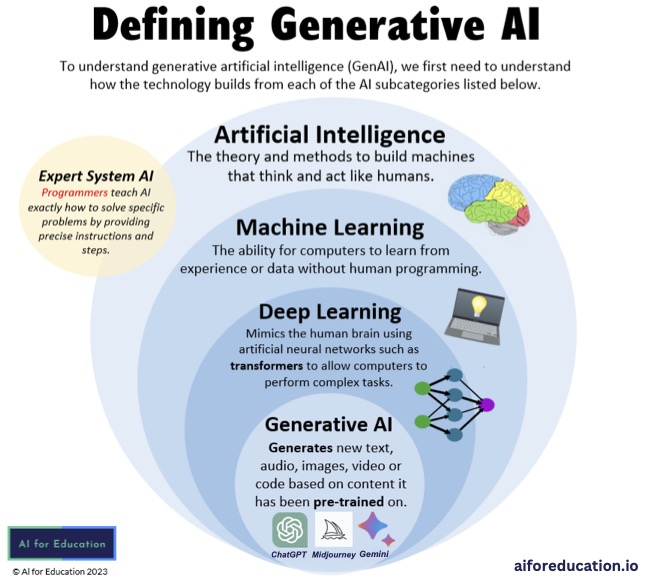

If we are going to have a conversation, though, we must get on the same page regarding terminology. When most of us think of “AI,” we are actually referring to generative AI tools that create new content. That content is based on the materials the tool has been given (its “training” data). Does this mean the tool is thinking? Not really. It is predicting. Drawing on enormous amounts of information, it predicts the most likely (or, more precisely, the most “average”) response.

It’s also important to consider the degree to which our day-to-day activities are now enmeshed with AI tools. AI summaries are embedded in search engines, LLMs (large language models like ChatGPT or Copilot) are turned on automatically in university accounts, and even academic databases offer AI analysis of texts that users must opt out of rather than opt into.

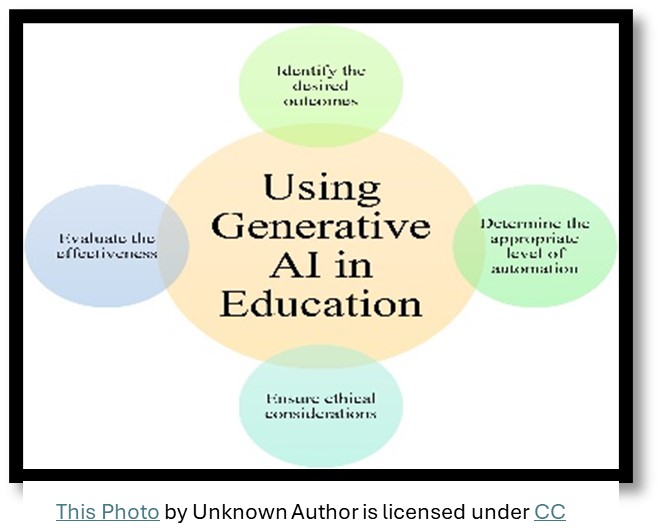

There are any number of ethical issues at stake in this conversation. Here are just a few:

- Access: Most LLM users do not get the full power of these tools unless they pay for upgraded access. AI tools (both assistive and generative) are already a part of almost every workforce, and familiarity with them will become necessary for certain vocations. Who has access to that training?

- Environmental Impact: The data centers required to process this much information consume huge quantities of natural resources. They also produce harmful byproducts and tend to be located in underserved communities. But the same is true of many industries. How can we use this conversation to become more aware of the large-scale impacts of our “normal” standards of living?

- Bias: Existing human biases are written into the training data and magnified. Most of the information that trains LLMs is in English, and likely reflects an American perspective. Furthermore, as an LLM or algorithm learns your own preferences, it feeds those preferences back to you with increasing frequency.

At Candler, a number of faculty are already experimenting with class assignments that incorporate AI. In our required introductory MDiv class, students read Justo Gonzalez’s *History of Theological Education* to get a sense of how seminaries have developed over time. They discuss the (often competing) credential-focused and formation-focused aspects of the curriculum and wrestle with the scope of MDiv requirements. Then they write a paper addressing the following questions: What is theological education? What is it for? Whom is it for? Why should anyone take it up? Why are you taking it up? And what does the increasing presence of artificial intelligence in our world mean for those in and around the world of theological education?

What do you think the role of seminary is in an age of AI? Comment below and stay tuned for the next workshop summaries in this series!